How we found a cheap way of increasing RAM size for InvariVision

As the InvariVision video database grew, searching for similar videos required more RAM and processing time. We could have simply added more RAM. Instead, we chose a cheaper and more practical alternative: moving part of the in-memory data into swap on an SSD.

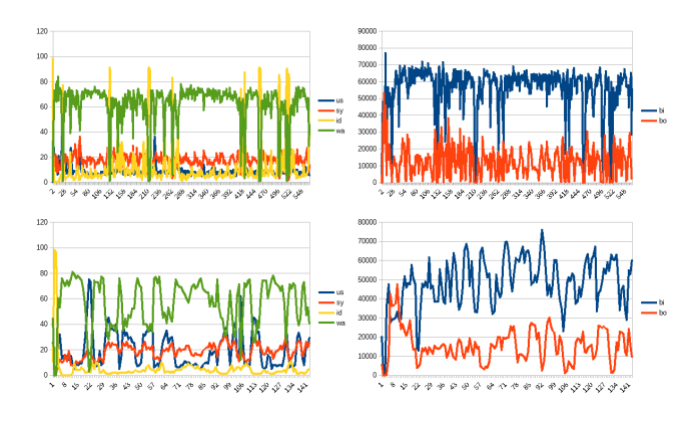

To better understand the problem, we added 2.4 GB search cores one by one while monitoring the system load, and kept adding them until most of the cores had been swapped out of RAM. We knew that an SSD is noticeably slower than RAM, and that the more the system swapped, the slower it would become overall. We therefore needed to optimize the data handling workflow: make the working data set small enough to fit into RAM, and swap working sets in and out as rarely as possible.

The algorithm that optimizes branches of the search index had the most scattered, unpredictable memory access pattern. To fix this, we implemented a “dirty flag”. Whenever a branch of the search tree is modified, it is marked as “dirty”, so the algorithm optimizes only the modified branches instead of working through the entire tree at once.
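A minimal sketch of the dirty-flag idea follows. The `Branch` class, `mark_dirty`, and `optimize` are hypothetical names chosen for illustration, and propagating the flag up to the ancestors is an assumption about how the optimizer finds modified branches from the root; it is not a description of our actual search index.

```python
from typing import List, Optional


class Branch:
    """Hypothetical search-tree node, for illustration only."""

    def __init__(self, name: str, parent: Optional["Branch"] = None) -> None:
        self.name = name
        self.parent = parent
        self.children: List["Branch"] = []
        self.dirty = False
        if parent is not None:
            parent.children.append(self)

    def mark_dirty(self) -> None:
        # Flag this branch and all of its ancestors, so a walk that starts
        # at the root can reach the modified branch through dirty nodes only.
        node: Optional["Branch"] = self
        while node is not None:
            node.dirty = True
            node = node.parent


def optimize(node: Branch, visited: List[str]) -> None:
    """Visit only dirty branches; clean subtrees are skipped entirely."""
    if not node.dirty:
        return  # never touched, so its memory pages do not need to be loaded
    visited.append(node.name)  # placeholder for the real optimization work
    for child in node.children:
        optimize(child, visited)
    node.dirty = False  # the subtree is clean again after optimization


root = Branch("root")
a = Branch("a", root)
a1 = Branch("a1", a)
b = Branch("b", root)
b1 = Branch("b1", b)

b1.mark_dirty()  # simulate a modification deep in the tree
touched: List[str] = []
optimize(root, touched)
print(touched)
```

In this sketch the optimizer never reads `a` or `a1` beyond the flag check on `a`, which is the property that matters when untouched branches may be sitting in swap.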

To reduce the working data set, we removed all unnecessary data from the search structures and laid out the remaining data in the order in which it is accessed. This reduced the memory usage of our search algorithm by 20%. The data also became more compact and simpler to access. Besides lowering RAM usage, this doubled the speed of writing core data.
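To illustrate what grouping data by access order can look like, here is a small sketch. The record shape (a `key` and a `payload` integer), the `pack_in_access_order` function, and the idea of a known `access_order` list are all assumptions made for this example and do not reflect our real search structures.

```python
from array import array
from typing import Dict, List, Tuple


def pack_in_access_order(
    records: Dict[int, Tuple[int, int]],
    access_order: List[int],
) -> Tuple[array, Dict[int, int]]:
    """Pack (key, payload) pairs into one flat array, in the order they are read.

    Returns the packed array and a map from record id to its slot index.
    """
    packed = array("q")  # signed long long values stored contiguously
    slot_of: Dict[int, int] = {}
    for record_id in access_order:
        if record_id in slot_of:
            continue  # already placed at its first point of use
        key, payload = records[record_id]
        slot_of[record_id] = len(packed) // 2  # two values per record
        packed.extend((key, payload))
    return packed, slot_of


# Records in arbitrary insertion order, and the order a search reads them in.
records = {10: (1, 100), 20: (2, 200), 30: (3, 300)}
packed, slot_of = pack_in_access_order(records, [30, 10, 30, 20])
print(list(packed), slot_of)
```

Records that are read one after another end up next to each other, so a search touches fewer distinct memory pages, and fewer pages have to be brought back from swap.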

There was one more problem to solve before switching our system to swap: a malfunction in any of the SSDs in the RAID could crash the application or even the entire system. There is no way to make the system entirely safe from such incidents. The only option we had was to minimize the damage if the system ever lost data or had its software corrupted.

The system is most vulnerable to crashes and power loss while it is writing core data to disk. If a failure happens at that moment, we have to restore the data from a backup and re-upload the videos that were added after the last backup. In all other cases, a crash only loses the videos that were being processed when the SSD malfunctioned. To minimize that damage, we added automatic restoration of all requests that were queued when the system crashed. After recovering, the system resumes work on the same set of requests it was processing at the time of the crash.
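One common way to make queued requests survive a crash is an append-only journal that is replayed on startup. The sketch below shows that general technique only; the `RequestJournal` class, the JSON-lines file format, and the request ids are hypothetical and are not our production mechanism.

```python
import json
import os
from typing import List


class RequestJournal:
    """Hypothetical append-only journal of queued requests."""

    def __init__(self, path: str) -> None:
        self.path = path

    def _append(self, entry: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(entry) + "\n")
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the entry to the device

    def enqueue(self, request_id: str) -> None:
        self._append({"op": "queued", "id": request_id})

    def complete(self, request_id: str) -> None:
        self._append({"op": "done", "id": request_id})

    def pending(self) -> List[str]:
        """Return requests that were queued but never marked as done."""
        if not os.path.exists(self.path):
            return []
        queued: List[str] = []
        done = set()
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                try:
                    entry = json.loads(line)
                except json.JSONDecodeError:
                    continue  # e.g. a half-written last line after a crash
                if entry["op"] == "queued":
                    queued.append(entry["id"])
                else:
                    done.add(entry["id"])
        return [r for r in queued if r not in done]
```

On startup, the service would call `pending()` and put the returned ids back into its work queue, in their original order. The journal would need to live on storage that is expected to survive the failure being guarded against.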

We started optimizing RAM usage on September 21, 2016, and by December 13 the system was already running on swap. In the future, we plan to implement our own specialized method of accessing the SSD. It will be more efficient than the system swap because it will take into account the specifics of how our search cores work.